AI and Cybersecurity: Promises and Pitfalls

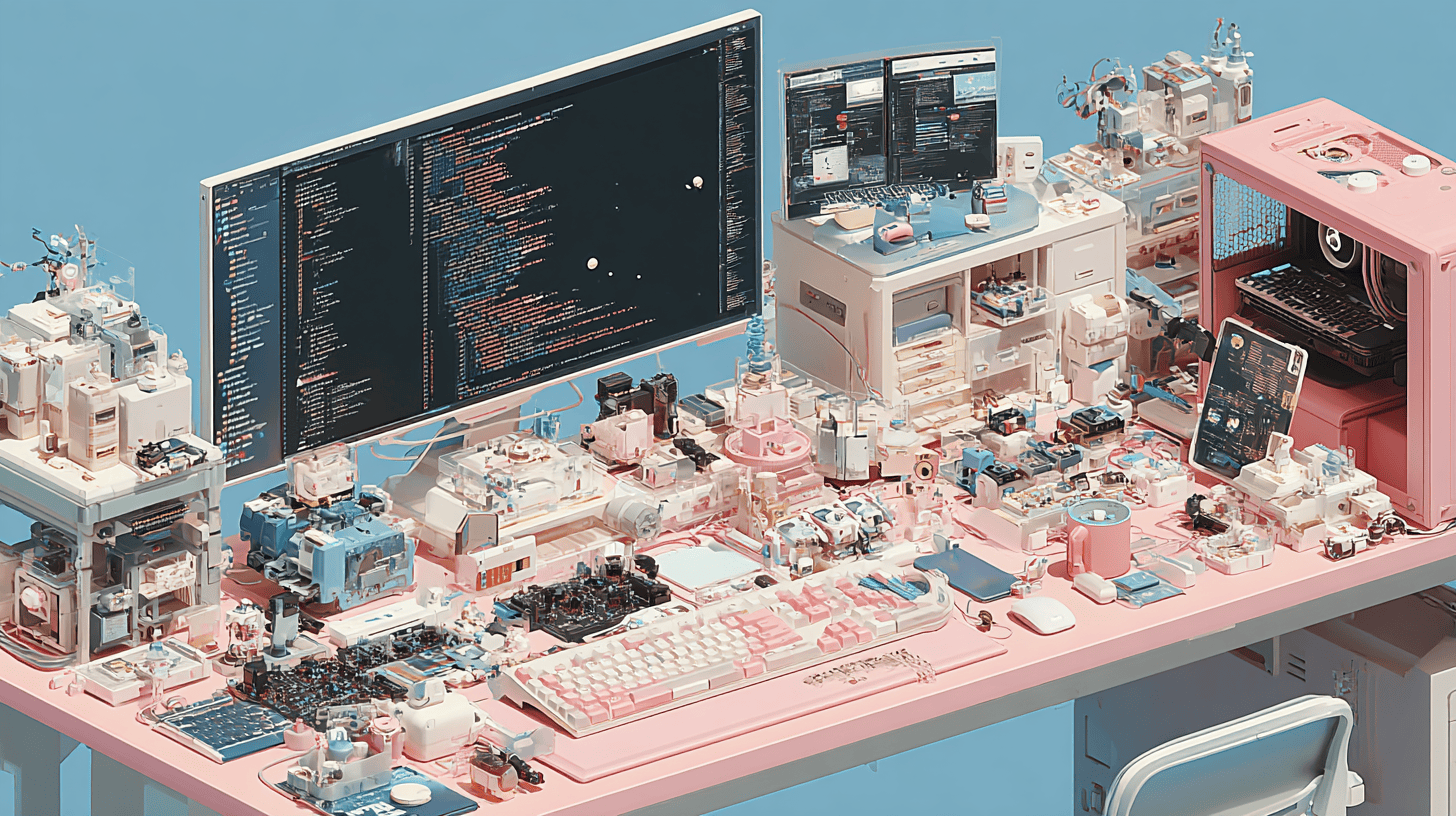

Artificial Intelligence (AI) is rapidly transforming the cybersecurity landscape. As threats grow more complex, organizations are turning to AI-powered tools to detect, analyze, and respond to attacks faster than manual processes allow. That promise comes with real responsibilities, and with a few dangerous pitfalls.

The Promises of AI in Cybersecurity

- Faster Threat Detection: AI can process large volumes of data in near real time to identify patterns, anomalies, or unusual behavior that might indicate a cyber attack. Techniques such as anomaly detection and behavioral analysis rely on machine learning models trained on large datasets of past activity.

- Automated Incident Response: With AI, security systems can automatically contain threats, quarantine infected machines, or alert the right teams, often within seconds. This shortens reaction time and limits damage.

- Predictive Defense: Predictive models can anticipate likely attack vectors based on past incidents and threat intelligence. This proactive stance allows teams to strengthen defenses before an attack occurs.

- Reduced False Positives: Traditional rule-based systems often generate large volumes of low-value alerts. AI models trained on real-world data can filter out benign activity and surface high-confidence alerts, reducing alert fatigue for security teams.
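To make the anomaly detection idea from the list above concrete, here is a minimal sketch using a z-score over a baseline of past activity. It is a deliberately simple statistical stand-in for the machine learning models the article refers to, not a description of any particular product. The function name, the threshold of 3.0, and the failed-login counts are all assumptions chosen for illustration.

```python
from statistics import mean, stdev


def find_anomalies(baseline, observed, threshold=3.0):
    """Return (index, value, z_score) for observed values far from the baseline."""
    mu = mean(baseline)
    sigma = stdev(baseline)  # sample standard deviation; needs >= 2 baseline points
    anomalies = []
    for i, value in enumerate(observed):
        if sigma == 0:
            # Constant baseline: treat any different value as anomalous.
            z = 0.0 if value == mu else float("inf")
        else:
            z = (value - mu) / sigma
        if abs(z) > threshold:
            anomalies.append((i, value, z))
    return anomalies


# Hypothetical hourly failed-login counts for one account.
baseline = [4, 6, 5, 7, 5, 6, 4, 5, 6, 5]
observed = [5, 6, 48, 4]

flagged = find_anomalies(baseline, observed)
for index, value, z in flagged:
    print(f"hour {index}: {value} failed logins (z-score {z:.1f})")
```

With this sample data only the spike of 48 failed logins is flagged. A production system would typically model many signals at once and account for seasonality, which a single z-score does not.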

The Pitfalls of Relying on AI

- Adversarial Attacks: Cybercriminals are learning how to manipulate or deceive AI systems using adversarial inputs: data crafted to make a machine learning model misclassify it. A poorly protected AI system can itself become a new attack surface.

- Bias and Blind Spots: AI is only as good as the data it's trained on. If training data lacks diversity or is biased, the AI might overlook certain threats or misclassify legitimate behavior as malicious.

- Over-Reliance and Complacency: Trusting AI blindly can be dangerous. AI should augment human decision-making — not replace it. Human oversight is still essential to validate findings and adapt to new, unpredictable threats.

- Data Privacy Concerns: Training and operating AI models often require access to large amounts of data, including sensitive logs and user activity. Mishandling this data can create privacy and compliance risks.
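The adversarial attack pitfall above can be illustrated with a toy example. The sketch below assumes a hypothetical linear detector that scores a file from a handful of made-up features. None of the feature names, weights, or the threshold come from a real product. It shows how an attacker who understands the scoring could pad a malicious sample with benign-looking features to slip under the threshold without changing its malicious behavior.

```python
# Hypothetical feature weights for a toy linear detector (illustrative only).
WEIGHTS = {
    "calls_powershell": 2.0,
    "writes_to_startup": 1.5,
    "obfuscated_strings": 1.0,
    "benign_imports": -0.5,  # each benign-looking import lowers the score
}
THRESHOLD = 2.5  # assumed cut-off: scores at or above this are flagged


def score(features):
    """Weighted sum of feature values; unknown features contribute nothing."""
    return sum(WEIGHTS.get(name, 0.0) * value for name, value in features.items())


def is_flagged(features):
    return score(features) >= THRESHOLD


# The original malicious sample is caught.
original = {"calls_powershell": 1, "writes_to_startup": 1, "obfuscated_strings": 1}

# Same malicious behavior, padded with five benign-looking imports.
evasive = dict(original, benign_imports=5)

print("original:", score(original), is_flagged(original))
print("evasive: ", score(evasive), is_flagged(evasive))
```

Real models are far more complex, but the weakness is the same in kind: if inputs the attacker controls can push the score down, the model needs hardening such as adversarial testing and features that are difficult to forge.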

Real-World Use Cases

Many companies today use AI-driven platforms to enhance their cybersecurity posture:

- SIEM (Security Information and Event Management) platforms with AI modules can correlate events across multiple log sources and flag combinations that suggest a possible breach.

- EDR (Endpoint Detection and Response) solutions use AI to detect unusual process behavior or file access patterns.

- Email filtering tools now use NLP-based models to detect phishing, fraud, and impersonation attempts.
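As a rough illustration of the event correlation mentioned in the first use case, the sketch below groups events by host and reports hosts where several distinct sources raised events close together in time. This is a plain rule-based heuristic, not an AI model and not how any specific SIEM works. The event fields (`ts`, `host`, `source`), the five-minute window, and the sample data are assumptions.

```python
from collections import defaultdict


def correlate(events, window_seconds=300, min_sources=2):
    """Return {host: sources} where enough distinct sources fired within the window."""
    by_host = defaultdict(list)
    for event in events:
        by_host[event["host"]].append(event)

    suspicious = {}
    for host, host_events in by_host.items():
        host_events.sort(key=lambda e: e["ts"])
        for i, first in enumerate(host_events):
            # Distinct sources seen within the window that starts at this event.
            sources = {
                e["source"]
                for e in host_events[i:]
                if e["ts"] - first["ts"] <= window_seconds
            }
            if len(sources) >= min_sources:
                suspicious[host] = sorted(sources)
                break
    return suspicious


# Hypothetical events; timestamps are seconds since an arbitrary start.
events = [
    {"ts": 1000, "host": "web-01", "source": "firewall"},
    {"ts": 1120, "host": "web-01", "source": "edr"},
    {"ts": 1200, "host": "db-01", "source": "firewall"},
    {"ts": 9000, "host": "db-01", "source": "auth"},
]

print(correlate(events))
```

Here `web-01` is reported because the firewall and the EDR both raised events within five minutes, while the two `db-01` events are too far apart to be linked.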

At Cyber Dream, we integrate AI into our analysis workflows to assist our experts — not replace them. From anomaly detection to automated triage, our approach combines smart algorithms with seasoned human insight.
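One generic way to keep experts in the loop during automated triage is to let a model's confidence decide only the order of review, never the final action. The sketch below is an illustration of that idea under assumed names and thresholds. It does not describe any particular vendor's workflow.

```python
def triage(alerts, review_threshold=0.5):
    """Split alerts into an analyst queue and a low-priority log.

    Nothing is acted on automatically: the model score only sets priority.
    """
    queues = {"analyst_review": [], "low_priority_log": []}
    for alert in alerts:
        if alert["confidence"] >= review_threshold:
            queues["analyst_review"].append(alert)
        else:
            queues["low_priority_log"].append(alert)
    # Highest-confidence alerts are reviewed first.
    queues["analyst_review"].sort(key=lambda a: a["confidence"], reverse=True)
    return queues


# Hypothetical alerts with model confidence scores between 0 and 1.
alerts = [
    {"id": "A2", "confidence": 0.15},
    {"id": "A3", "confidence": 0.61},
    {"id": "A1", "confidence": 0.92},
]

result = triage(alerts)
print([a["id"] for a in result["analyst_review"]])
print([a["id"] for a in result["low_priority_log"]])
```

Low-confidence alerts are logged rather than discarded, so analysts can still sample them to check what the model is missing.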

Best Practices for Using AI in Security

- Always validate AI findings with expert review before taking action.

- Use explainable AI models where possible to understand why a decision was made.

- Continuously update training datasets to reflect evolving threats.

- Monitor AI performance and false positive rates over time.

- Ensure data used in training and inference respects user privacy and compliance rules (e.g., GDPR).
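Monitoring false positives over time, as recommended above, can start with something as simple as tracking the share of raised alerts that analysts later marked as false positives. The sketch below assumes each reviewed alert carries a `week` label and a `verdict` field. Both names, the 0.5 limit, and the sample data are hypothetical. Strictly speaking this measures the proportion of false alerts among raised alerts, not the statistical false positive rate, which would also require counting benign events that were correctly ignored.

```python
from collections import defaultdict


def false_alert_share_by_week(reviewed_alerts):
    """Return {week: fraction of that week's alerts judged false positives}."""
    counts = defaultdict(lambda: [0, 0])  # week -> [false positives, total]
    for alert in reviewed_alerts:
        bucket = counts[alert["week"]]
        bucket[1] += 1
        if alert["verdict"] == "false_positive":
            bucket[0] += 1
    return {week: fp / total for week, (fp, total) in sorted(counts.items())}


def weeks_over_limit(shares, limit=0.5):
    """List the weeks whose false-alert share exceeds the limit."""
    return [week for week, share in shares.items() if share > limit]


# Hypothetical analyst verdicts for two weeks of alerts.
reviewed = (
    [{"week": "2024-W01", "verdict": "false_positive"}] * 1
    + [{"week": "2024-W01", "verdict": "true_positive"}] * 3
    + [{"week": "2024-W02", "verdict": "false_positive"}] * 3
    + [{"week": "2024-W02", "verdict": "true_positive"}] * 1
)

shares = false_alert_share_by_week(reviewed)
print(shares)
print(weeks_over_limit(shares))
```

A sudden jump like the one in the second week is a prompt to investigate, for example whether the environment changed or the training data has gone stale.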

Conclusion

AI in cybersecurity is not a silver bullet — but when used correctly, it can be a powerful tool in the defender’s arsenal. Its strengths lie in speed, scale, and pattern recognition. But it must be used with caution, transparency, and a solid understanding of its limits.

At Cyber Dream, we believe in an AI-assisted future — where technology and human expertise work together to build safer digital environments.